For years, enterprise AI conversations have revolved around agents: the autonomous entities that plan, reason, and act. In slide decks and product pitches, the agent is portrayed as a brain that processes inputs, makes decisions, and produces outputs. But when you peel back the layers of a real system, a different story emerges: the agent is only as powerful as the tools it can call.

New agentic AI systems are expected not only to reason but also to execute. Before we talk about tools, let’s clarify what an agent really does and why, at DataNeuron, we believe the Toolbook deserves as much attention as the agent itself.

What an Agent Really Does

An agent handles the thinking and decision-making; tools handle the doing. Tools perform the actual actions, such as classifying text, scraping websites, sending emails, pulling data from CRMs, or writing to dashboards. Without tools, an agent can process information but can’t act on it. In short, the agent decides what needs to be done and when, and the tools do it.

From Reasoning to Action

This is where the execution layer comes in. Tools translate an agent’s intent into real-world action. Crucially, the agent doesn’t have to know how each tool works internally; it only needs to know three things:

- What the tool does

- What input to give it

- What output to expect
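To make those three requirements concrete, here is a minimal Python sketch of a tool contract. The `ToolSpec` and `Tool` classes, the `ClassifySentiment` tool, and its keyword logic are hypothetical illustrations, not DataNeuron’s API. The point is that the agent-facing view carries only the description and the input/output schemas, while the implementation stays hidden.

```python
from dataclasses import dataclass
from typing import Any, Callable, Dict


@dataclass(frozen=True)
class ToolSpec:
    """Hypothetical tool contract: everything the agent is allowed to see."""
    name: str
    description: str                # what the tool does
    input_schema: Dict[str, type]   # what input to give it
    output_schema: Dict[str, type]  # what output to expect


@dataclass
class Tool:
    spec: ToolSpec
    run: Callable[[Dict[str, Any]], Dict[str, Any]]  # hidden implementation

    def agent_view(self) -> Dict[str, Any]:
        # The agent receives the contract only, never the implementation.
        return {
            "name": self.spec.name,
            "description": self.spec.description,
            "input": {k: t.__name__ for k, t in self.spec.input_schema.items()},
            "output": {k: t.__name__ for k, t in self.spec.output_schema.items()},
        }


def keyword_sentiment(payload: Dict[str, Any]) -> Dict[str, Any]:
    # Toy implementation; a real tool might call a trained classifier instead.
    text = payload["text"].lower()
    negative = any(word in text for word in ("broken", "late", "refund"))
    return {"label": "negative" if negative else "neutral"}


sentiment = Tool(
    spec=ToolSpec(
        name="ClassifySentiment",
        description="Labels a customer message as negative or neutral",
        input_schema={"text": str},
        output_schema={"label": str},
    ),
    run=keyword_sentiment,
)

print(sentiment.agent_view())
print(sentiment.run({"text": "My order arrived broken"}))
```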

This clean separation of reasoning (agent) and execution (tools) keeps systems modular, interpretable, and easy to govern. You can upgrade or swap out tools without retraining the agent, which gives large enterprises what they need: faster iteration cycles and safer deployments.
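A rough sketch of why swapping a tool leaves the agent untouched, assuming a hypothetical name-based registry (`ToolRegistry`) and two made-up order-lookup backends. The agent-side call is identical before and after the upgrade, because only the implementation registered under the name changes.

```python
from typing import Any, Callable, Dict

Handler = Callable[[Dict[str, Any]], Dict[str, Any]]


class ToolRegistry:
    """Hypothetical registry: the agent refers to tools by name only."""

    def __init__(self) -> None:
        self._handlers: Dict[str, Handler] = {}

    def register(self, name: str, handler: Handler) -> None:
        # Registering an existing name replaces its implementation.
        self._handlers[name] = handler

    def call(self, name: str, payload: Dict[str, Any]) -> Dict[str, Any]:
        return self._handlers[name](payload)


def lookup_order_v1(payload: Dict[str, Any]) -> Dict[str, Any]:
    # Original backend: a small legacy table (stand-in for a real database).
    legacy = {"A-100": "shipped"}
    return {"status": legacy.get(payload["order_id"], "unknown")}


def lookup_order_v2(payload: Dict[str, Any]) -> Dict[str, Any]:
    # Upgraded backend with more coverage; same input and output schema.
    current = {"A-100": "shipped", "A-200": "processing"}
    return {"status": current.get(payload["order_id"], "unknown")}


registry = ToolRegistry()
request = {"order_id": "A-200"}

registry.register("LookupOrder", lookup_order_v1)
before = registry.call("LookupOrder", request)

# Swap the implementation; the agent-side call is exactly the same.
registry.register("LookupOrder", lookup_order_v2)
after = registry.call("LookupOrder", request)

print(before, after)
```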

A Quick Scenario: Customer Support

Suppose your AI receives the task “analyze complaints and send a summary to the team.” A traditional chatbot would try to handle everything within a single model. An agentic system built on DataNeuron does it differently:

- Fetches customer history from the CRM using an API-based tool.

- Classifies the complaint and extracts order IDs using DataNeuron Native Tools, such as multiclass classifiers and NER.

- Retrieves troubleshooting steps via Structured RAG.

- Summarizes the case with a custom tool configured by your support ops team.

- Sends an acknowledgment using an external mail connector.

The result is an automated pipeline for work that once required manual coordination across multiple teams.
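The same flow can be sketched as a sequence of tool calls that share one context. Everything here is a stand-in under stated assumptions: each function fakes a real tool with in-memory data or a regular expression, the names and data are hypothetical, and the order is fixed, whereas in an agentic system the agent would choose which tool to call next.

```python
import re
from typing import Any, Callable, Dict, List

Context = Dict[str, Any]
Step = Callable[[Context], Context]


def fetch_history(ctx: Context) -> Context:
    # Stand-in for an API-based CRM tool.
    crm = {"cust-42": ["2024-01: late delivery"]}
    return {"history": crm.get(ctx["customer_id"], [])}


def classify_and_extract(ctx: Context) -> Context:
    # Stand-in for a native classifier + NER: keyword label, regex for order IDs.
    text = ctx["complaint"]
    category = "delivery" if "deliver" in text.lower() else "other"
    return {"category": category, "order_ids": re.findall(r"ORD-\d+", text)}


def retrieve_steps(ctx: Context) -> Context:
    # Stand-in for Structured RAG over a support manual.
    manual = {"delivery": "Check carrier tracking, then offer a reship."}
    return {"steps": manual.get(ctx["category"], "Escalate to a human agent.")}


def summarize_case(ctx: Context) -> Context:
    # Stand-in for a custom summarization tool.
    ids = ", ".join(ctx["order_ids"]) or "none"
    return {"summary": f"[{ctx['category']}] orders: {ids}. Next: {ctx['steps']}"}


def send_ack(ctx: Context) -> Context:
    # Stand-in for an external mail connector: records the recipient, sends nothing.
    return {"ack_queued_for": ctx["customer_id"]}


def run_pipeline(ctx: Context, steps: List[Step]) -> Context:
    # Each tool reads the shared context and merges its outputs into it.
    for step in steps:
        ctx.update(step(ctx))
    return ctx


result = run_pipeline(
    {"customer_id": "cust-42", "complaint": "ORD-981 was never delivered."},
    [fetch_history, classify_and_extract, retrieve_steps, summarize_case, send_ack],
)
print(result["summary"])
```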

Inside the DataNeuron Toolbook

At DataNeuron, we built the Toolbook to make this orchestration simple and scalable. Instead of hand-coding workflows, users can select from a library of pre-built tools or define their own. Everything is callable through standard input/output schemas so that the agent can pick and mix tools without brittle integrations.
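One way to picture what standard schemas buy you: if each tool declares the fields and types it produces and consumes, checking whether two tools can be chained becomes a simple comparison. This is a hypothetical sketch with made-up schemas, not the Toolbook’s actual schema format.

```python
from typing import Dict, List

Schema = Dict[str, type]


def missing_fields(produced: Schema, required: Schema) -> List[str]:
    """Fields the consumer needs that the producer does not supply with the right type."""
    return [field for field, typ in required.items() if produced.get(field) is not typ]


def can_chain(produced: Schema, required: Schema) -> bool:
    # Two tools can be chained when nothing the consumer needs is missing.
    return not missing_fields(produced, required)


# Hypothetical schemas for three tools.
ner_output: Schema = {"text": str, "entities": list}
classifier_input: Schema = {"text": str}
summarizer_input: Schema = {"text": str, "label": str}

print(can_chain(ner_output, classifier_input))
print(missing_fields(ner_output, summarizer_input))
```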

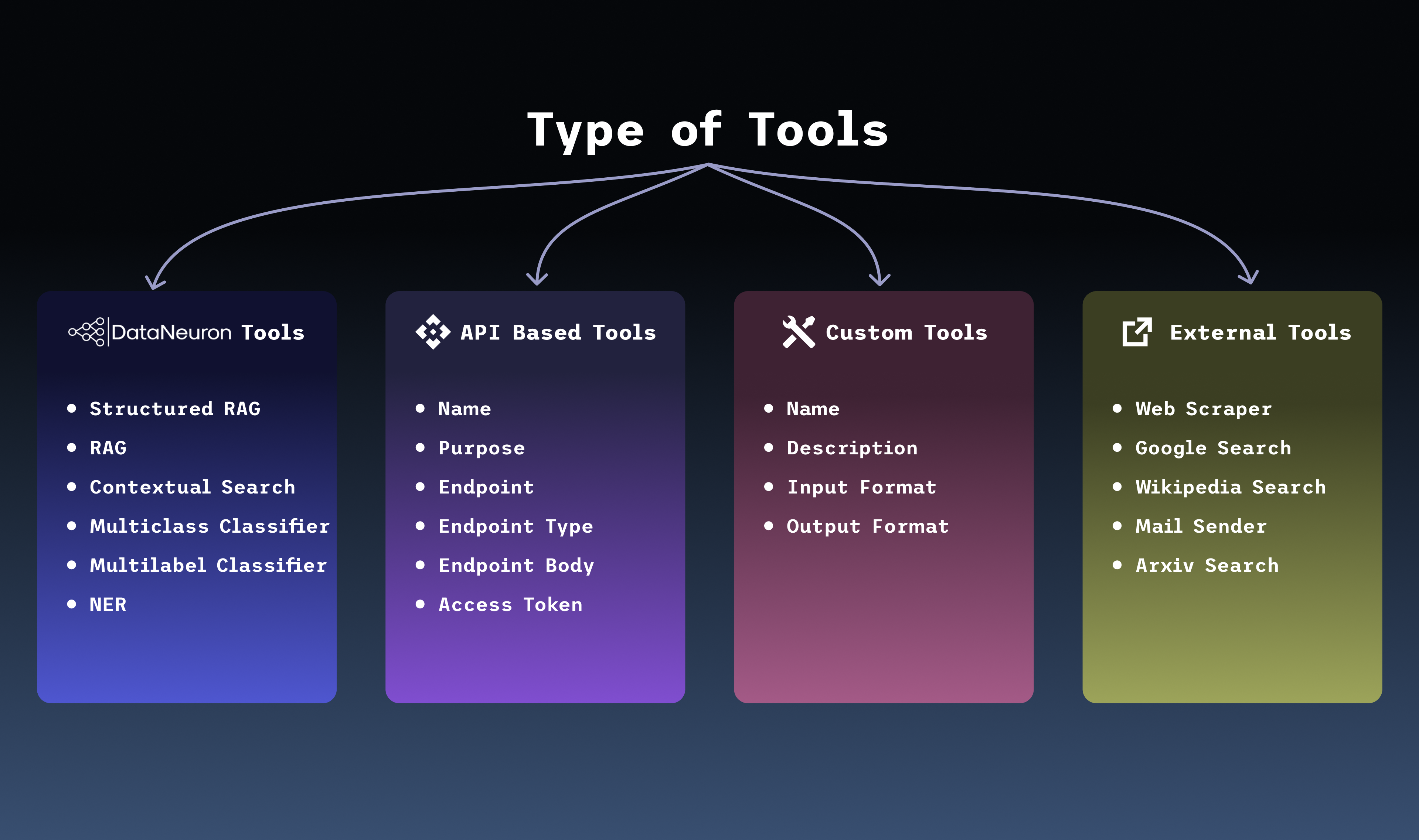

We organize our Toolbook into four pillars, each extending the agent’s reach in a different direction.

1. DataNeuron Native Tools

These were the first tools we built in the studio: high-utility, pre-configured tools optimized for AI workflows, often described as the “intelligence primitives” of your agent. They’re ready to call as soon as you deploy an agent:

- Structured RAG (Retrieval-Augmented Generation): Combines document indexing with structured memory, letting agents pull curated data sets in real time. Ideal for regulatory documents, knowledge bases, or customer support manuals.

- Contextual Search: Allows agents to query within a bounded knowledge base, perfect for domain-specific applications like legal, customer service, or biomedical agents.

- Multiclass & Multilabel Classifiers: Let agents tag or categorize inputs, such as sorting customer feedback by sentiment and urgency or routing tickets to the right department.

- Named Entity Recognition (NER): Extracts names, locations, products, and other entities, essential for parsing resumes, contracts, or customer emails.

You don’t code these tools; you configure them. The agent calls them as needed, with predictable inputs and outputs.
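As an illustration of configuring rather than coding, the sketch below assumes a made-up configuration dictionary (its keys are not DataNeuron’s format) and made-up model scores. It also shows the practical difference between the two classifier types: multiclass picks exactly one label, while multilabel keeps every label whose score clears the configured threshold.

```python
from typing import Any, Dict, List

# Hypothetical classifier configuration; the keys are illustrative only.
config: Dict[str, Any] = {
    "labels": ["billing", "delivery", "urgent"],
    "threshold": 0.5,
}


def multiclass(scores: Dict[str, float], cfg: Dict[str, Any]) -> str:
    # Multiclass: exactly one label, the highest-scoring configured label.
    return max(cfg["labels"], key=lambda label: scores.get(label, 0.0))


def multilabel(scores: Dict[str, float], cfg: Dict[str, Any]) -> List[str]:
    # Multilabel: every configured label at or above the threshold (possibly none).
    return [label for label in cfg["labels"] if scores.get(label, 0.0) >= cfg["threshold"]]


# Made-up model scores for a single customer message.
scores = {"billing": 0.12, "delivery": 0.81, "urgent": 0.64}
print(multiclass(scores, config))  # delivery
print(multilabel(scores, config))  # ['delivery', 'urgent']
```

Changing the behavior, for example raising the threshold to 0.7 so that only `delivery` survives, is a configuration edit rather than a code change.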

2. External Tools

These extend the agent’s reach into the broader digital ecosystem. Think of them as bridges between your agent and the open web or third-party services. Examples include:

- Web Scraper to pull structured data from webpages, such as prices, job postings, and event schedules.

- Google, Wikipedia, and arXiv Search for real-time knowledge retrieval, essential for summarizing or validating claims.

- Mail Sender to automate communications such as acknowledgments, follow-ups, and onboarding instructions.

With external tools, your agent can enrich its answers, validate facts, and trigger outward-facing actions.
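A minimal sketch of the outward-facing half of a Mail Sender tool, using only Python’s standard `email` package. The addresses, subject line, and `build_acknowledgment` helper are hypothetical. The sketch stops at building the message; actually delivering it (for example with `smtplib.SMTP(...).send_message(msg)`) would depend on your mail infrastructure.

```python
from email.message import EmailMessage


def build_acknowledgment(to_addr: str, order_id: str) -> EmailMessage:
    # Builds the message only; a real Mail Sender tool would hand it to an
    # SMTP server or a mail API for delivery.
    msg = EmailMessage()
    msg["From"] = "support@example.com"  # hypothetical sender address
    msg["To"] = to_addr
    msg["Subject"] = f"We received your request about {order_id}"
    msg.set_content(
        f"Thanks for reaching out. Your request about {order_id} is being reviewed."
    )
    return msg


msg = build_acknowledgment("customer@example.com", "ORD-981")
print(msg["Subject"])
```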

3. Custom Tools

Not every enterprise workflow fits into an off-the-shelf template. That’s why we let you create custom tools by simply defining:

- name (e.g., “SummarizeComplaint”)

- description (“Summarizes customer complaint emails into action items”)

- input/output schema

Based on this metadata, the DataNeuron platform generates a callable tool automatically. This is especially powerful in domains where business logic is unique, such as parsing health insurance claims, configuring automated compliance checks, or running internal analytics.

You define what the tool does, not how it does it, while the system handles the integration.
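A rough sketch of what generating a callable tool from metadata could look like, assuming a hypothetical `make_tool` factory. The factory wraps whatever executes the tool (here a placeholder `fake_backend` that splits sentences; on a real platform this might be a language model prompted with the description) with input and output validation taken from the declared schema. It illustrates the idea only and is not how DataNeuron implements it.

```python
from typing import Any, Callable, Dict

Schema = Dict[str, type]
Backend = Callable[[str, Dict[str, Any]], Dict[str, Any]]


def make_tool(name: str, description: str, input_schema: Schema,
              output_schema: Schema, backend: Backend) -> Callable[..., Dict[str, Any]]:
    """Hypothetical factory: turns tool metadata into a validated callable."""

    def tool(**kwargs: Any) -> Dict[str, Any]:
        # Validate inputs against the declared schema.
        for field, typ in input_schema.items():
            if not isinstance(kwargs.get(field), typ):
                raise TypeError(f"{name}: input '{field}' must be {typ.__name__}")
        # The backend receives the description as its instruction.
        result = backend(description, kwargs)
        # Validate outputs so downstream tools can rely on the contract.
        for field, typ in output_schema.items():
            if not isinstance(result.get(field), typ):
                raise TypeError(f"{name}: output '{field}' must be {typ.__name__}")
        return result

    tool.__name__ = name
    tool.__doc__ = description
    return tool


def fake_backend(instruction: str, inputs: Dict[str, Any]) -> Dict[str, Any]:
    # Placeholder for the platform's execution engine: one action item per sentence.
    sentences = [s.strip() for s in inputs["email"].split(".") if s.strip()]
    return {"action_items": sentences}


summarize_complaint = make_tool(
    name="SummarizeComplaint",
    description="Summarizes customer complaint emails into action items",
    input_schema={"email": str},
    output_schema={"action_items": list},
    backend=fake_backend,
)

print(summarize_complaint(email="Refund ORD-7. Update my address."))
```

Validating both sides of the call is the design choice that matters here: a malformed input fails before any work is done, and a malformed output never reaches the next tool.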

4. API-Based Tools

These connect agents to external systems or databases, so your AI can read from and act on the systems your business already runs. You define the tool’s:

- Name and purpose

- API endpoint and method

- Auth/token structure

- Request/response format

From there, the platform generates a tool that the agent can call. This enables workflows like:

- Fetching real-time data from a food delivery backend.

- Pushing recommendations into a CRM.

- Triggering marketing campaigns.

API-based tools let agents interact with your production systems securely and at scale.
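The four fields above map naturally onto an HTTP request. The following sketch assumes a hypothetical `ApiToolSpec` and a made-up CRM endpoint on `example.com`. It builds the request with the standard library’s `urllib.request` without sending it, and filters a sample JSON response down to the declared fields so the agent always sees the same output shape.

```python
import json
import urllib.request
from dataclasses import dataclass
from typing import Any, Dict, List


@dataclass
class ApiToolSpec:
    # Hypothetical spec mirroring the fields above; not DataNeuron's format.
    name: str
    purpose: str
    endpoint: str
    method: str
    token_header: str           # e.g., "Authorization"
    token_prefix: str           # e.g., "Bearer "
    response_fields: List[str]  # fields the agent is allowed to see


def build_request(spec: ApiToolSpec, token: str, body: Dict[str, Any]) -> urllib.request.Request:
    # Serialize the JSON body and attach the auth header.
    return urllib.request.Request(
        spec.endpoint,
        data=json.dumps(body).encode("utf-8"),
        method=spec.method,
        headers={
            "Content-Type": "application/json",
            spec.token_header: spec.token_prefix + token,
        },
    )


def parse_response(spec: ApiToolSpec, raw: bytes) -> Dict[str, Any]:
    # Keep only declared fields so the agent sees a stable output schema.
    payload = json.loads(raw)
    return {field: payload.get(field) for field in spec.response_fields}


spec = ApiToolSpec(
    name="PushRecommendation",
    purpose="Push a product recommendation into the CRM",
    endpoint="https://crm.example.com/api/recommendations",
    method="POST",
    token_header="Authorization",
    token_prefix="Bearer ",
    response_fields=["id", "status"],
)

req = build_request(spec, token="demo-token", body={"customer_id": "cust-42", "sku": "SKU-1"})
print(req.get_method(), req.full_url)
# Sending would be urllib.request.urlopen(req); here we parse a sample response instead.
print(parse_response(spec, b'{"id": 7, "status": "queued", "internal": "x"}'))
```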

Another Scenario: Digital Health Assistant

To see how these pieces fit together, imagine a hospital deploying a digital health assistant for its doctors and patients. A patient logs in and asks for an explanation of their latest blood test report:

- API-Based Tool fetches the patient’s lab results from the hospital’s CRM or EHR database.

- DataNeuron Native Tools (NER + multilabel classifier + Structured RAG) extract key metrics, flag abnormal values, and pull relevant medical guidelines from an internal knowledge base.

- Custom Tool created by the hospital’s analytics team generates a plain-language summary of the patient’s health status and next steps.

- External Tools email the report to the patient and physician and, if the doctor requests supporting evidence, pull the latest relevant research articles.

All of this happens automatically. The agent decides the sequence of actions; each tool performs its specific function. Data is fetched, analyzed, explained, enriched with context, and delivered without the doctor or patient stitching the pieces together manually.
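The idea that the agent decides the sequence of actions can be sketched with a deliberately simple, rule-based planner. This is an assumption made for readability: a real agent would typically let the model choose its steps, and the step names below are hypothetical labels for the tools in the scenario. The point is that the optional research step appears only when the doctor asks for evidence.

```python
from typing import Dict, List


def plan_steps(request: Dict[str, bool]) -> List[str]:
    """Hypothetical planner: choose which tools to call, and in what order."""
    steps = [
        "fetch_lab_results",    # API-based tool (EHR)
        "extract_and_flag",     # native NER + multilabel classifier
        "retrieve_guidelines",  # native Structured RAG
        "write_summary",        # custom tool
    ]
    if request.get("doctor_wants_evidence"):
        steps.append("search_research")  # external search tool, only on request
    steps.append("email_report")         # external mail tool, always last
    return steps


print(plan_steps({"doctor_wants_evidence": False}))
print(plan_steps({"doctor_wants_evidence": True}))
```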

Why This Matters

Moving from model-first to tool-first thinking turns AI from a smart assistant into an operational actor. Modular tools let agents take sequential actions toward complex goals while giving enterprises governance and flexibility: tools can be audited or swapped without altering the agent’s logic, new capabilities can be added like apps on a phone, and clear input/output schemas simplify security and compliance integration.
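Auditability is easiest when every tool call passes through one wrapper. A minimal sketch, assuming an in-memory `AUDIT_LOG` list and a toy `classify_urgency` tool (both hypothetical): the decorator records inputs, outputs or errors, and duration without touching the tool’s logic or the agent’s.

```python
import functools
import time
from typing import Any, Callable, Dict, List

AUDIT_LOG: List[Dict[str, Any]] = []  # in a real system, durable storage


def audited(tool_name: str):
    """Decorator that records every call to a tool for later review."""
    def decorator(fn: Callable[..., Dict[str, Any]]):
        @functools.wraps(fn)
        def wrapper(**kwargs: Any) -> Dict[str, Any]:
            entry: Dict[str, Any] = {"tool": tool_name, "inputs": dict(kwargs)}
            started = time.perf_counter()
            try:
                result = fn(**kwargs)
                entry["outputs"] = result
                return result
            except Exception as exc:
                # Failed calls are logged too, then re-raised unchanged.
                entry["error"] = repr(exc)
                raise
            finally:
                entry["seconds"] = time.perf_counter() - started
                AUDIT_LOG.append(entry)
        return wrapper
    return decorator


@audited("ClassifyUrgency")
def classify_urgency(text: str) -> Dict[str, Any]:
    # Toy logic standing in for a real classifier.
    return {"urgent": "asap" in text.lower()}


classify_urgency(text="Need a refund ASAP")
print(AUDIT_LOG[0]["tool"], AUDIT_LOG[0]["outputs"])
```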

The most valuable AI tool in the future won’t be the one that “knows” everything. It will be the one that knows how to get things done, and that’s exactly what the DataNeuron Agentic AI Toolbook is built for.

At DataNeuron, we’re not trying to replace engineers; we’re giving them a new medium. Workflows can be designed from reusable tools, customized by intent, and executed by agents that know when and why to use them. Instead of one massive, brittle model, you get a living ecosystem where each component can evolve independently.