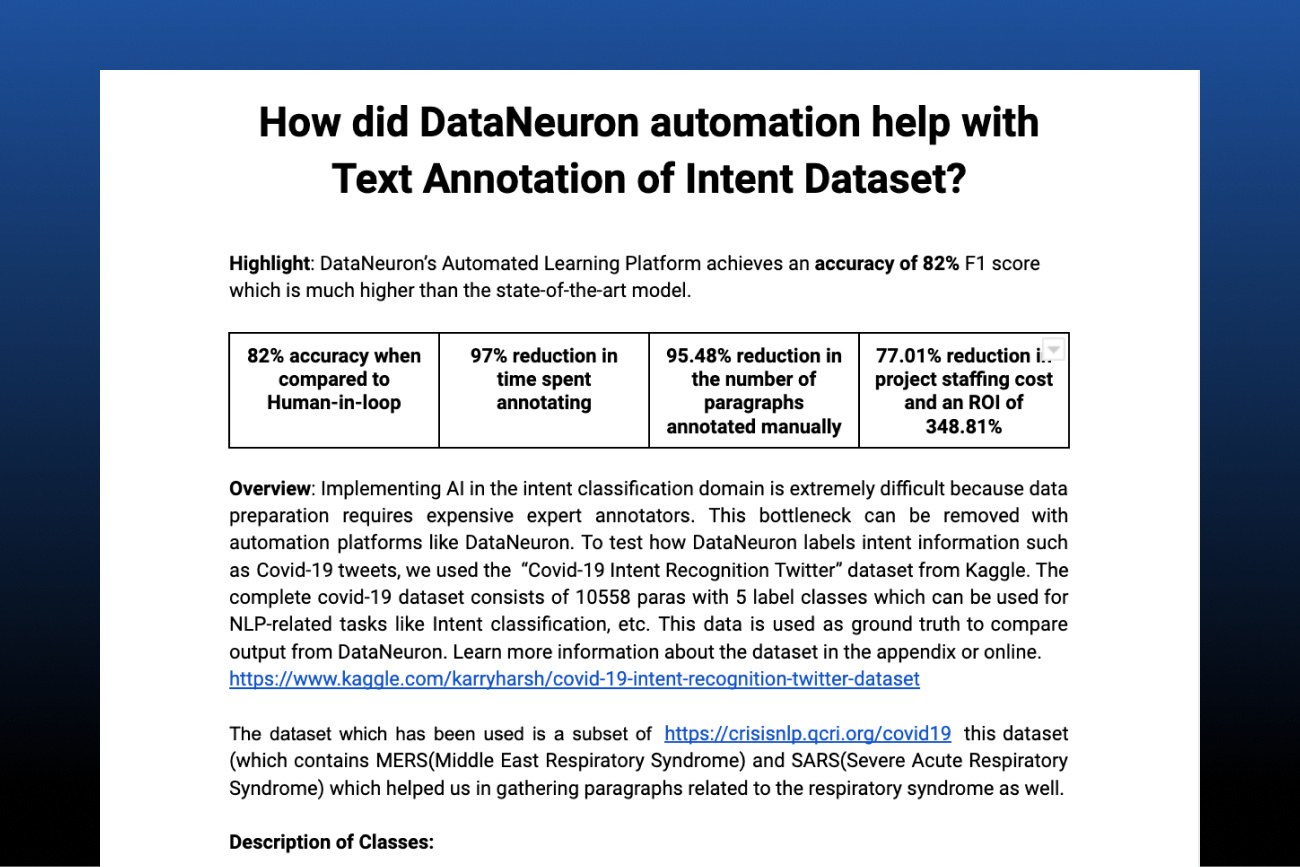

Highlight: DataNeuron’s Automated Learning Platform achieves an F1 score of 82%, which is comparable to the best-performing model.

Overview: Data preparation for intent classification usually requires expensive expert annotators, which makes implementing AI in this domain difficult. Automation platforms like DataNeuron can help lower this barrier. We used Kaggle’s “Covid-19 Intent Recognition Twitter” dataset to see how well DataNeuron identifies intent in Covid-19 tweets. The full dataset has 10558 paragraphs divided into 5 classes and can be used for NLP tasks such as intent classification. This data serves as a baseline against which DataNeuron’s output can be compared. More information on the dataset can be found in the appendix or on the internet.

This study used a subset of the data available at https://crisisnlp.qcri.org/covid19. That dataset, which also covers MERS (Middle East Respiratory Syndrome) and SARS (Severe Acute Respiratory Syndrome), helped us compile paragraphs on respiratory syndromes.

Class Description:

The masterlist consists of the classes and their associated keywords. The keywords are written intuitively for each class and serve as inputs to our platform. We train our model on the following classes: disease_signs_or_symptoms, disease_transmission, deaths_reports, prevention, and treatment.

Background on the DataNeuron Automated Learning Platform:

The Automated Learning Platform (ALP) from DataNeuron is designed to ingest unstructured data like these Covid-19 tweets, build AI models with minimal human validation, and predict labels with high accuracy. The diagram below depicts DataNeuron’s ALP flow.

The platform performs automatic annotation and provides the users with a list of annotated paragraphs. The users simply have to validate whether the annotation is correct or incorrect. Instead of making users go through the entire dataset to label paragraphs, DataNeuron offers this validation-based approach which reduces the time taken to annotate each paragraph. Based on our estimate, it takes approximately 30 seconds for a user to identify whether a paragraph belongs to a particular class.

The Covid-19 data was fed into DataNeuron’s ALP flow, where a combination of machine learning models was built based on the input. In the first stage, these models identified the irrelevant paragraphs among the 10558 raw paragraphs. The remaining relevant paragraphs were strategically annotated with one of the 5 target classes. User validation was required on only 477 annotated paragraphs to reach an F1 score of 82%.

Simplifying SME Task:

With this same data, subject matter experts (SMEs) doing human-in-the-loop labeling would have to go through every paragraph in the dataset and assign it a target class. This exercise would take a tremendous amount of time and effort. DataNeuron’s recognize vs. recall approach simplifies the validator’s task to a large extent: the validator only has to recognize whether a suggested label is correct, rather than recall the right label from scratch.

Manual Effort Reduction:

Conservatively assuming 45 seconds are needed to manually annotate each paragraph, it would take about 132 hours to manually annotate the complete dataset of 10558 paragraphs. Assuming it takes 30 seconds to validate one paragraph on DataNeuron, validating 477 paragraphs takes about 4 hours. This amounts to a 97% reduction in the human effort required.
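
The arithmetic behind these figures can be checked with a short sketch. The paragraph counts and per-paragraph times come from the text above; the function and variable names are illustrative only.

```python
def annotation_hours(paragraphs: int, seconds_each: float) -> float:
    """Total effort in hours for a given number of paragraphs."""
    return paragraphs * seconds_each / 3600

# Figures taken from the article
manual_hours = annotation_hours(10558, 45)      # label every paragraph by hand
validation_hours = annotation_hours(477, 30)    # validate ALP suggestions only

# Share of manual effort that is no longer needed
effort_reduction_pct = 100 * (1 - validation_hours / manual_hours)

print(f"Manual annotation: {manual_hours:.1f} h")
print(f"ALP validation:    {validation_hours:.1f} h")
print(f"Effort reduction:  {effort_reduction_pct:.1f} %")
```

Rounded to whole numbers, the results match the 132 hours, 4 hours, and 97% quoted above.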

Accuracy close to the best classifier model:

In another experiment, an SVM model was used to classify the paragraphs in this dataset. It achieved an overall 86% precision at 85% recall (85% F1 score). With 477 paragraphs for manual validation, which is only about 4.5% of the raw data, DataNeuron achieved a comparable F1 score of 82%.
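
As a quick check, the F1 score is the harmonic mean of precision and recall, so the SVM figure can be reproduced from the two numbers quoted above. This is a minimal sketch; the helper name is illustrative, and it assumes the reported precision and recall are overall values that can be combined directly.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return 2 * precision * recall / (precision + recall)

# SVM baseline figures from the article
svm_f1 = f1_score(0.86, 0.85)

# Share of the raw data that needed manual validation on DataNeuron
validated_share = 477 / 10558

print(f"SVM F1:          {svm_f1:.3f}")
print(f"Validated share: {validated_share:.2%}")
```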

Calculating the Cost ROI:

The annotation cost is $1320 for the in-house data team and around $1405 for the data science team, for a total of $2725. The cost of annotating 477 paragraphs using the DataNeuron ALP is around $607.3. This is a 77.71% reduction in cost, a significant saving, with a cost ROI of 348.81%.
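
The cost figures can be reproduced with the sketch below. The dollar amounts come from the text above. The ROI formula (savings divided by the ALP cost) is an assumption, since the article does not state how ROI was computed, and the variable names are illustrative.

```python
# Cost figures from the article, in US dollars
in_house_cost = 1320
data_science_cost = 1405
alp_cost = 607.3  # quoted as "around", so downstream results are approximate

baseline_cost = in_house_cost + data_science_cost
savings = baseline_cost - alp_cost

# Reduction relative to the manual baseline
cost_reduction_pct = 100 * savings / baseline_cost

# Assumed ROI definition: savings relative to what was spent on the ALP
roi_pct = 100 * savings / alp_cost

print(f"Baseline cost:  ${baseline_cost}")
print(f"Cost reduction: {cost_reduction_pct:.2f} %")
print(f"Cost ROI:       {roi_pct:.1f} %")
```

With the rounded ALP cost, this assumed formula gives an ROI slightly below the 348.81% quoted above. The small gap is consistent with the ALP cost having been rounded to $607.3.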

No requirement for a Data Science/Machine Learning Expert:

By design, the DataNeuron ALP removes the need for prior knowledge of data science or machine learning to use the platform to its full potential. The only human intervention required is validating the labeled output.

Conclusion:

The results above show that automating data labeling with DataNeuron provides comparable accuracy with reduced human effort and cost.