Most failures in production AI systems do not originate from flawed architectures or suboptimal algorithms. They stem from data. As real-world inputs diverge from the data used during training, model performance deteriorates. Without a precise record of what changed and when, teams are forced into reactive debugging with limited visibility.

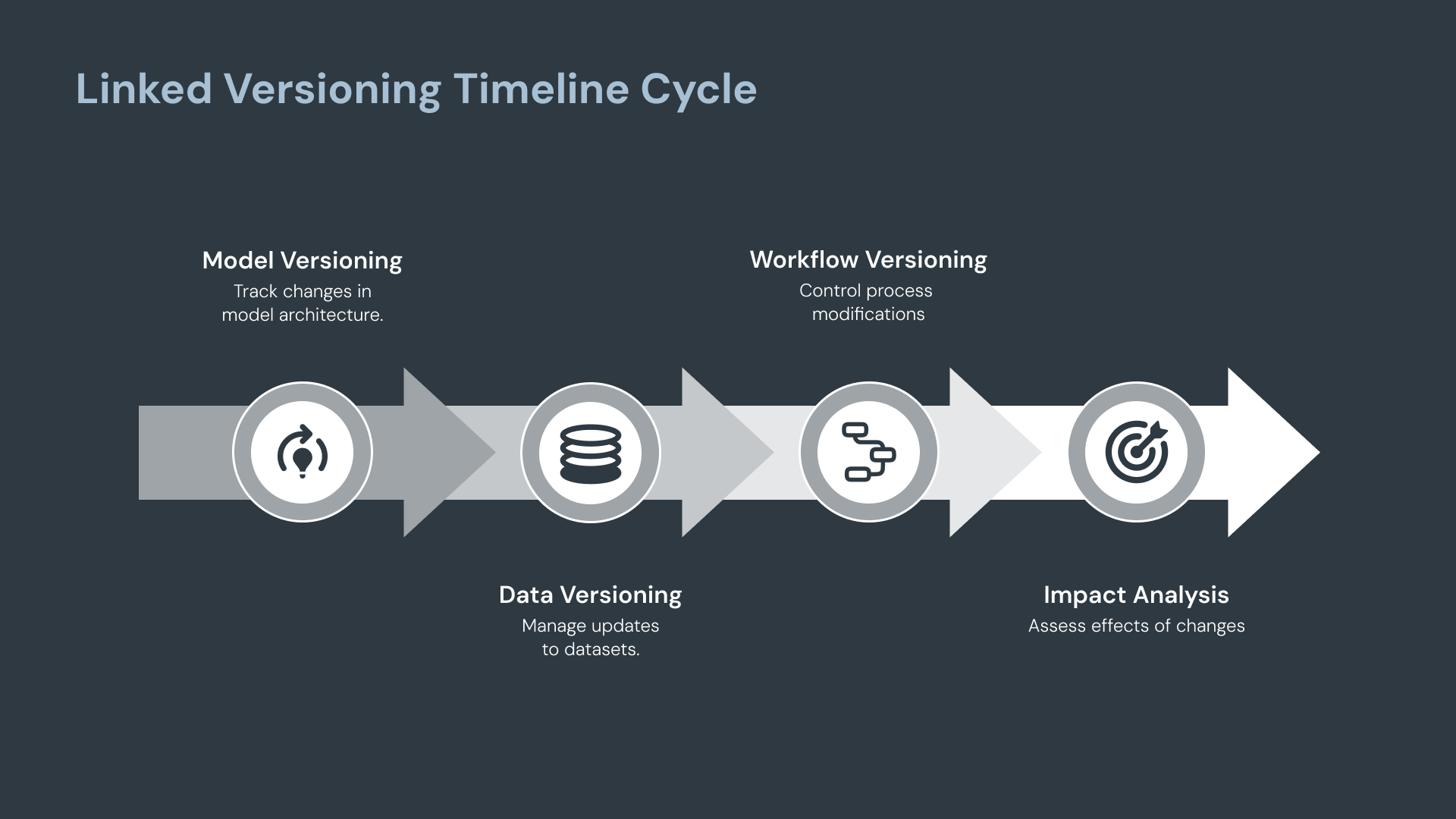

Versioning introduces structure into this complexity. Maintaining a living history of datasets and workflows enables teams to trace changes, compare alternatives, and restore known-good states. With large language models continuously fed by updated corpora, corrected labels, and evolving prompts, versioning has become foundational to reproducibility, traceability, and reliable deployment. In MLOps, this shift is comparable to the transition from ad-hoc scripting to CI/CD in DevOps.

Model Versioning: A Solved Problem, in Isolation

Model versioning is now a well-established practice. Modern MLOps platforms make it straightforward to track trained models, their hyperparameters, and evaluation metrics. Teams routinely rely on these capabilities to:

- Compare architectures and tuning strategies

- Roll back to earlier checkpoints

- Verify which model version was deployed

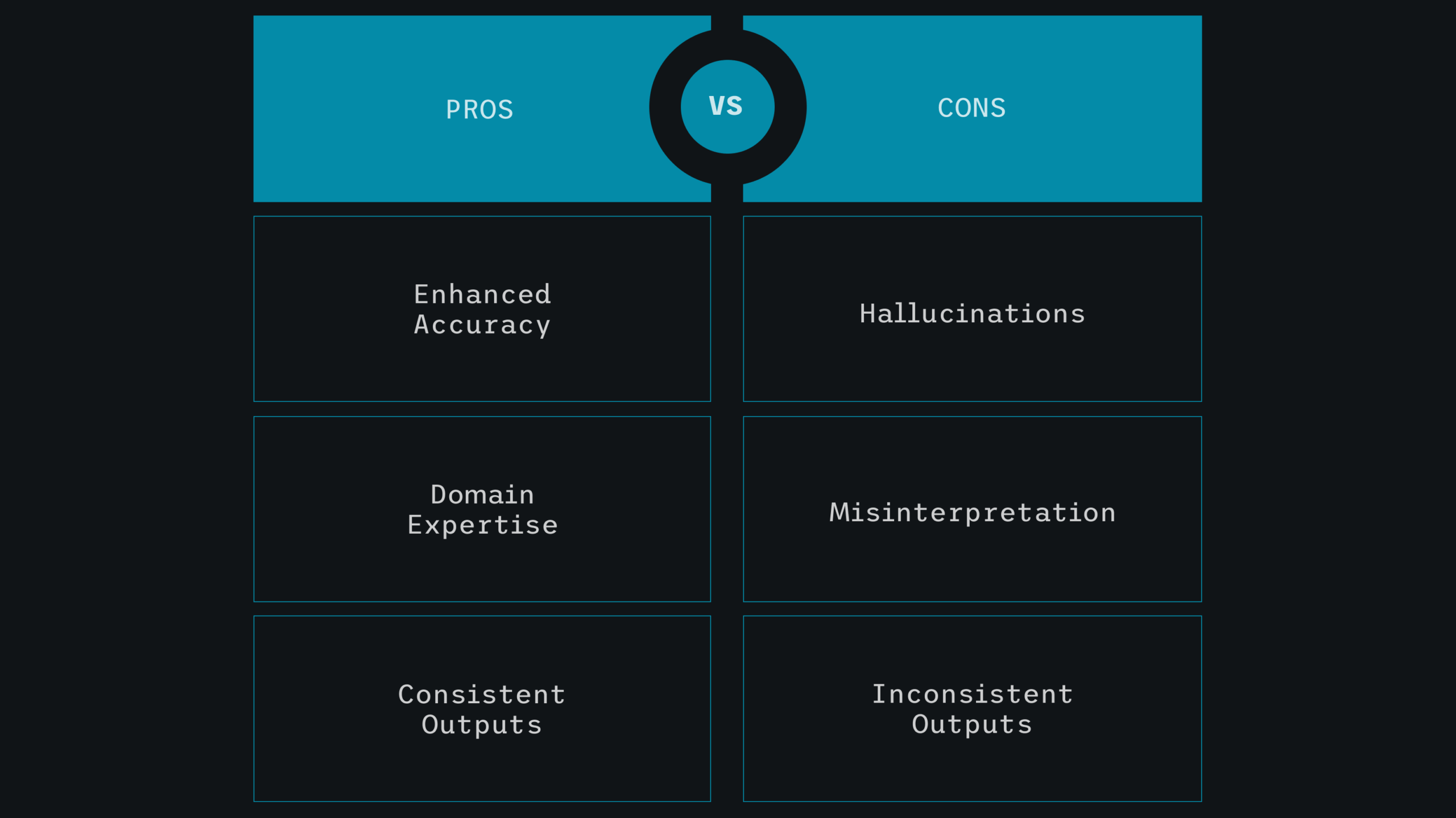

However, model versioning alone provides an incomplete picture. A model trained on Dataset A will behave differently from the same model trained on Dataset B, even if all configurations remain unchanged. Without a clear link between models and the exact data used to train them, reproducibility breaks down.
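One lightweight way to make that link explicit is to store a content hash of the training data next to the model's configuration. The following is a minimal sketch under stated assumptions, not any platform's API: `dataset_fingerprint`, `model_card`, and the sample records are hypothetical names chosen for illustration.

```python
import hashlib
import json


def dataset_fingerprint(records):
    """Return a SHA-256 hex digest over the records (record order matters)."""
    digest = hashlib.sha256()
    for record in records:
        # Canonical JSON so key order inside a record does not change the hash.
        digest.update(json.dumps(record, sort_keys=True).encode("utf-8"))
        digest.update(b"\n")
    return digest.hexdigest()


def model_card(model_name, hyperparameters, records):
    """Bundle the model's configuration with the fingerprint of its training data."""
    return {
        "model": model_name,
        "hyperparameters": hyperparameters,
        "dataset_sha256": dataset_fingerprint(records),
    }


# Hypothetical datasets that differ in a single label.
dataset_a = [{"text": "refund policy", "label": "billing"}]
dataset_b = [{"text": "refund policy", "label": "support"}]

card_a = model_card("classifier-v1", {"lr": 2e-5}, dataset_a)
card_b = model_card("classifier-v1", {"lr": 2e-5}, dataset_b)
```

Both cards carry identical hyperparameters, yet their `dataset_sha256` values differ. That difference is the information model versioning alone does not capture.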

Data Versioning: The Missing Half of LLM Operations

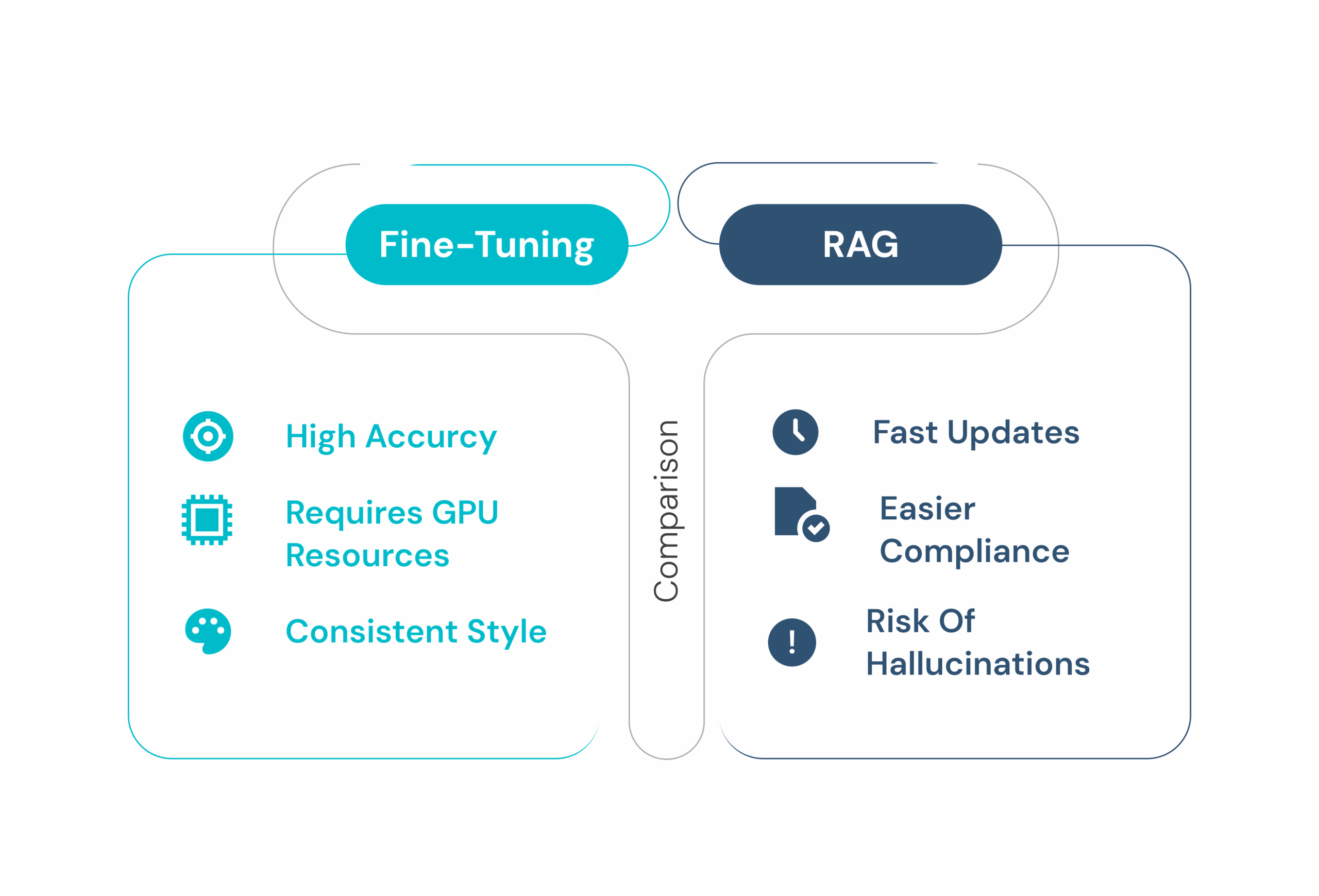

Large language models amplify this problem. LLM performance is tightly coupled to training data composition, ordering, preprocessing, and incremental updates. Fine-tuning the same base model on slightly different datasets can lead to materially different outputs. Hence, effective LLMOps requires treating data versioning with the same rigor as model versioning. This shift is driven by several forces:

1. Regulatory and Audit Requirements

In regulated industries, it is not sufficient to know that a dataset changed. Organizations must know who made the change, when it occurred, and why. Data versioning preserves authorship, timestamps, and contextual metadata for every snapshot, enabling audit-ready workflows.
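As a rough illustration of what such a record might contain, the sketch below keeps an append-only log in which every snapshot carries an author, a UTC timestamp, and a reason. The `Snapshot` and `AuditLog` classes are hypothetical and kept in memory only; a real system would persist them and control who may write.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class Snapshot:
    """Immutable audit record for one dataset snapshot."""
    version: int
    author: str
    reason: str
    created_at: datetime


class AuditLog:
    """Append-only history: snapshots can be added but never edited."""

    def __init__(self):
        self._snapshots = []

    def record(self, author, reason):
        snapshot = Snapshot(
            version=len(self._snapshots) + 1,
            author=author,
            reason=reason,
            created_at=datetime.now(timezone.utc),  # timezone-aware timestamp
        )
        self._snapshots.append(snapshot)
        return snapshot

    def history(self):
        # Return a tuple so callers cannot mutate the log through it.
        return tuple(self._snapshots)


audit_log = AuditLog()
audit_log.record("alice", "initial import of support tickets")
audit_log.record("bob", "corrected mislabeled billing tickets")
```

Calling `audit_log.history()` then answers who changed the data, when, and why for each version.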

2. Scaling Unstructured and Semi-Structured Data

LLMs rely on vast volumes of text, documents, logs, and conversational data that change continuously. At this volume, these inputs cannot be managed by hand or tracked reliably without version control.

3. Managing Drift in Long-Lived LLMs

LLMs deployed in production degrade over time as user behavior, language patterns, and knowledge domains evolve. Addressing this drift requires knowing exactly which data version produced the current behavior before introducing updates.

4. Collaboration Across Teams

LLMOps is inherently cross-functional. Data scientists, ML engineers, and platform teams often work on shared datasets and prompts. Versioning prevents accidental overwrites, duplication, and untraceable changes.

Recent advances in tooling have lowered the barrier significantly. What once required custom pipelines and manual bookkeeping can now be integrated directly into production workflows.

Why Model and Data Versioning Must Work Together

In enterprise LLM systems, versioning cannot exist in silos. Teams need unified visibility across models, data, and pipelines. For any deployed model, they must be able to answer:

- Which dataset version was used for fine-tuning?

- Which preprocessing and prompt transformations were applied?

- Which configuration produced the observed behavior?

This linkage transforms LLM development from an experimental process into a reproducible engineering discipline. It also enables transparency. When an output is questioned, teams can trace it back to the exact data snapshot and model configuration that generated it.
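The three questions above map naturally onto a lineage record keyed by model version. Here is a minimal in-memory sketch; the `Lineage` class, the `register` and `trace` helpers, and every version name in it are hypothetical placeholders rather than part of any specific tool.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Lineage:
    """What produced a given model version."""
    dataset_version: str
    transforms: tuple   # ordered preprocessing / prompt transformation names
    config: tuple       # sorted (key, value) pairs, kept immutable


REGISTRY = {}


def register(model_version, dataset_version, transforms, config):
    """Record the lineage of a model version at training time."""
    REGISTRY[model_version] = Lineage(
        dataset_version=dataset_version,
        transforms=tuple(transforms),
        config=tuple(sorted(config.items())),
    )


def trace(model_version):
    """Answer: which data, which transformations, which configuration?"""
    lineage = REGISTRY[model_version]
    return {
        "dataset_version": lineage.dataset_version,
        "transforms": list(lineage.transforms),
        "config": dict(lineage.config),
    }


register(
    "support-bot-v3",
    dataset_version="tickets-v12",
    transforms=["dedupe", "strip_pii"],
    config={"epochs": 3, "lr": 1e-5},
)
```

When an output from `support-bot-v3` is questioned, `trace("support-bot-v3")` returns the dataset version, transformations, and configuration behind it in a single lookup.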

A Practical Example from LLM Operations

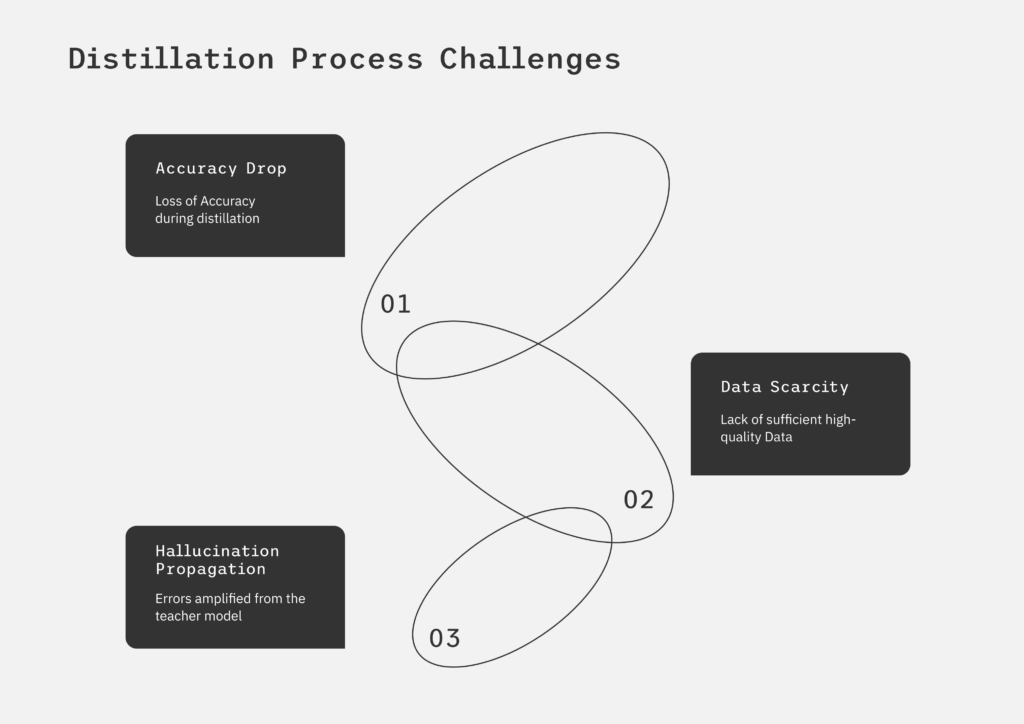

Consider an LLM initially fine-tuned on a curated dataset and deployed into production. Over time, new data becomes available: fresh documents, updated terminology, and emerging use cases. The model’s performance on newer queries begins to decline.

Without versioning, teams often rebuild the pipeline from scratch, re-ingesting all data and repeating every step. With data versioning in place, the process changes fundamentally. The original dataset can be cloned, new data appended, and fine-tuning resumed from a known state. The workflow does not have to be redone end to end, which saves both time and compute while preserving reproducibility.
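The clone-and-append step can be pictured with a small sketch in which a new version stores only its added records plus a pointer to its parent. This is an illustrative toy, not a description of how any particular product stores data; `DatasetVersion` and the document names are hypothetical.

```python
class DatasetVersion:
    """A dataset version that stores only its own additions and a parent link."""

    def __init__(self, name, records=(), parent=None):
        self.name = name
        self.parent = parent
        self._added = tuple(records)  # records introduced by this version only

    def clone(self, name, new_records=()):
        """Create a child version without copying or re-ingesting the parent."""
        return DatasetVersion(name, new_records, parent=self)

    def records(self):
        """Materialize the full dataset: inherited records, then this version's."""
        inherited = self.parent.records() if self.parent else []
        return inherited + list(self._added)


v1 = DatasetVersion("v1", ["doc-a", "doc-b"])
v2 = v1.clone("v2", ["doc-c"])  # append fresh data on top of the known state
```

Here `v2.records()` yields the original documents followed by the new one, while `v1` remains untouched and can still be used to reproduce the earlier fine-tuning run.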

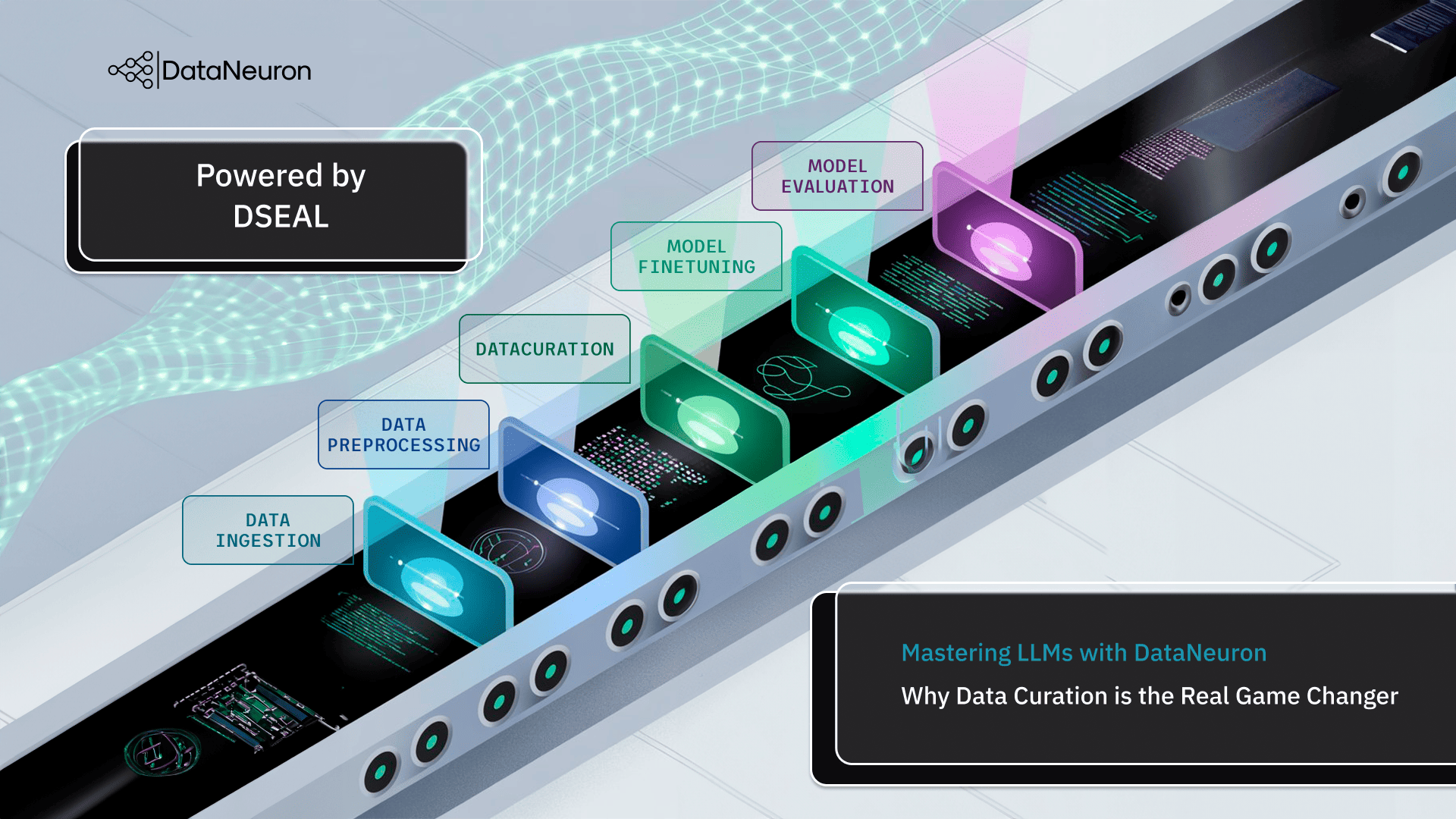

How DataNeuron Extends Versioning Beyond Data

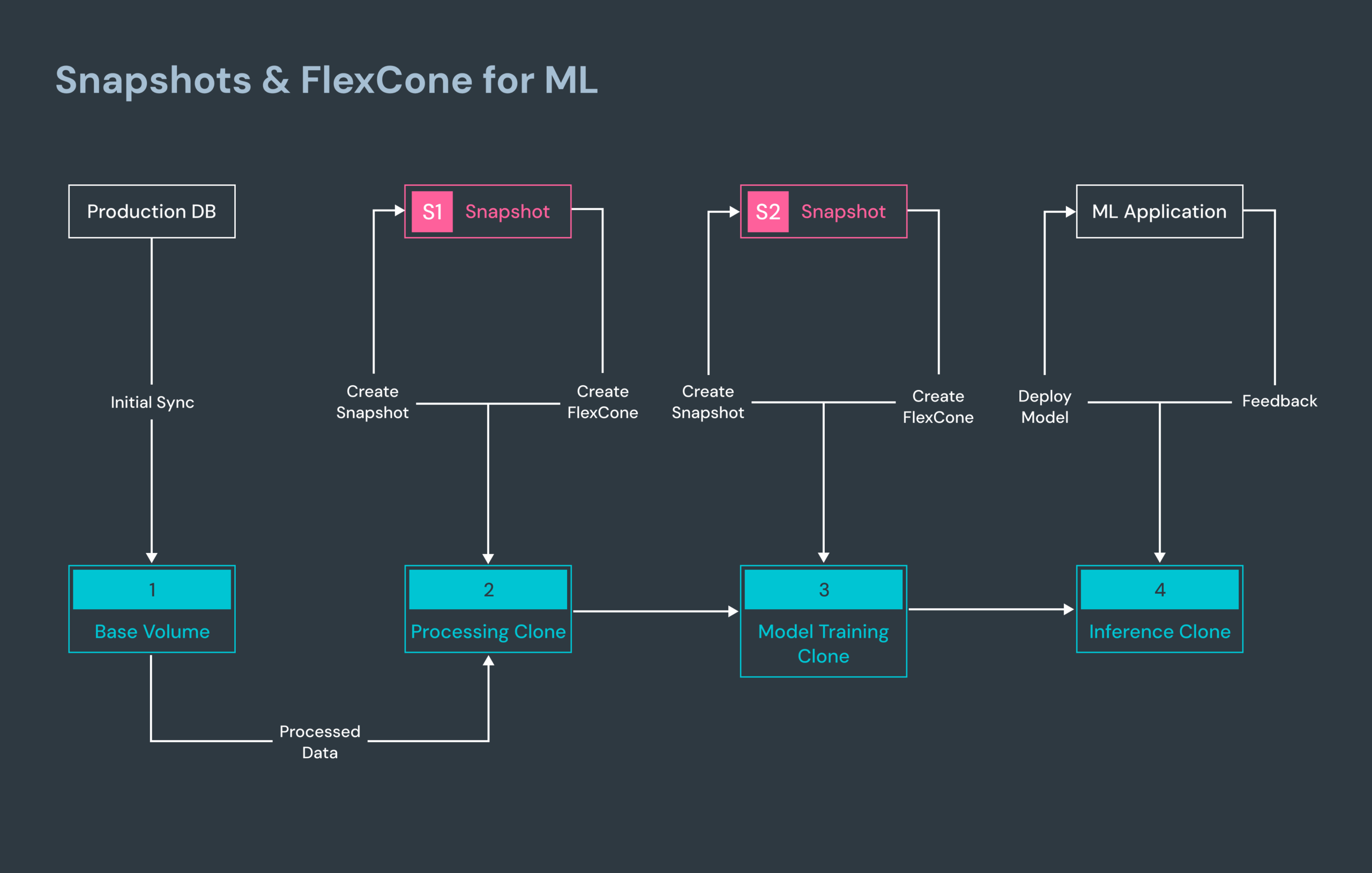

Traditional data versioning focuses on snapshotting datasets. At DataNeuron, we extend this concept to the entire LLM training and fine-tuning workflow.

This approach draws inspiration from enterprise storage systems, where snapshots and clone-based architectures allow teams to create space-efficient copies, roll back to specific points in time, and re-run workloads without re-ingestion. We apply the same principles to LLMOps.

Fork at Any Stage

LLM fine-tuning workflows often span multiple stages, from ingestion and preprocessing to tuning and evaluation. Without versioning, discovering an issue late in the pipeline forces teams to restart from the beginning. With DataNeuron, workflows can be forked at any stage, configurations adjusted, and execution resumed immediately.
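To make the idea of forking concrete, the sketch below caches each stage's output so that a fork can reuse everything before the fork point and re-run only what follows. It is a generic illustration and does not represent DataNeuron's implementation or API; the `run` helper and the stage functions are hypothetical.

```python
calls = []  # records which stages actually executed


def ingest(raw):
    calls.append("ingest")
    return [line.strip() for line in raw]


def lowercase(docs):
    calls.append("lowercase")
    return [doc.lower() for doc in docs]


def keep_case(docs):
    calls.append("keep_case")
    return list(docs)


def run(stages, data, cached=(), start=0):
    """Run stages[start:], reusing cached outputs for the stages before `start`."""
    outputs = list(cached[:start])
    current = outputs[-1] if outputs else data
    for stage in stages[start:]:
        current = stage(current)
        outputs.append(current)
    return outputs


# Baseline run executes every stage.
baseline = run([ingest, lowercase], [" Hello ", " World "])

# Fork after ingestion: swap the preprocessing stage and resume from stage 1.
fork = run([ingest, keep_case], None, cached=baseline, start=1)
```

After both runs, `calls` contains `ingest` only once: the fork reused the cached ingestion output and executed just the replaced stage.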

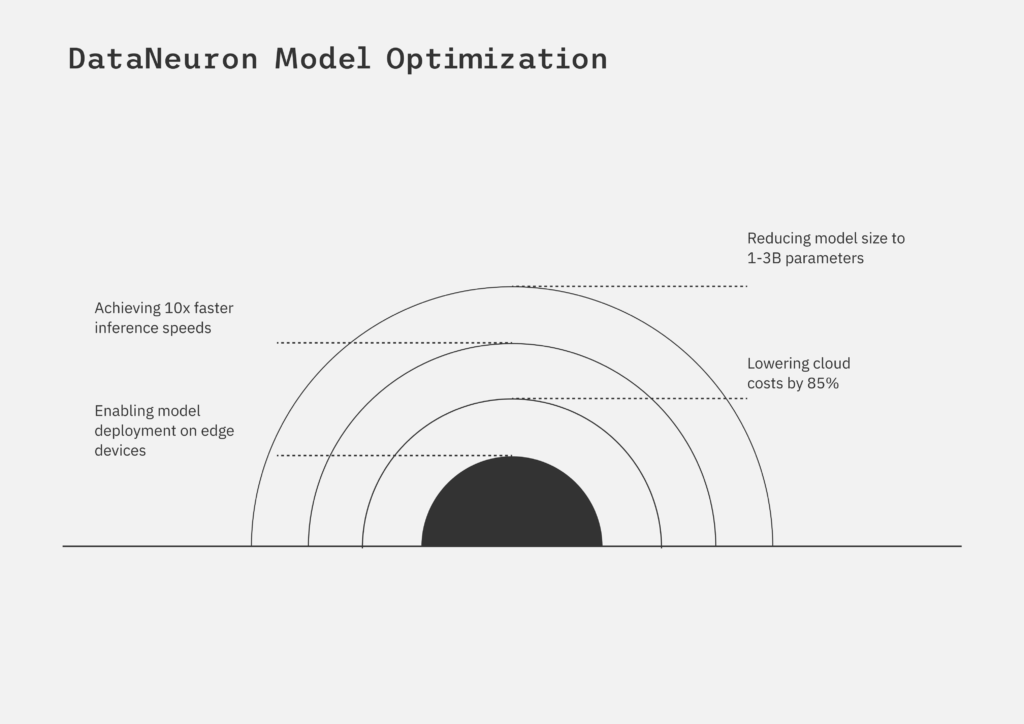

Parallel Versions for Faster Iteration

Teams can maintain multiple dataset and workflow versions in parallel, enabling side-by-side experimentation instead of slow, sequential runs. This shortens iteration cycles for LLM fine-tuning.

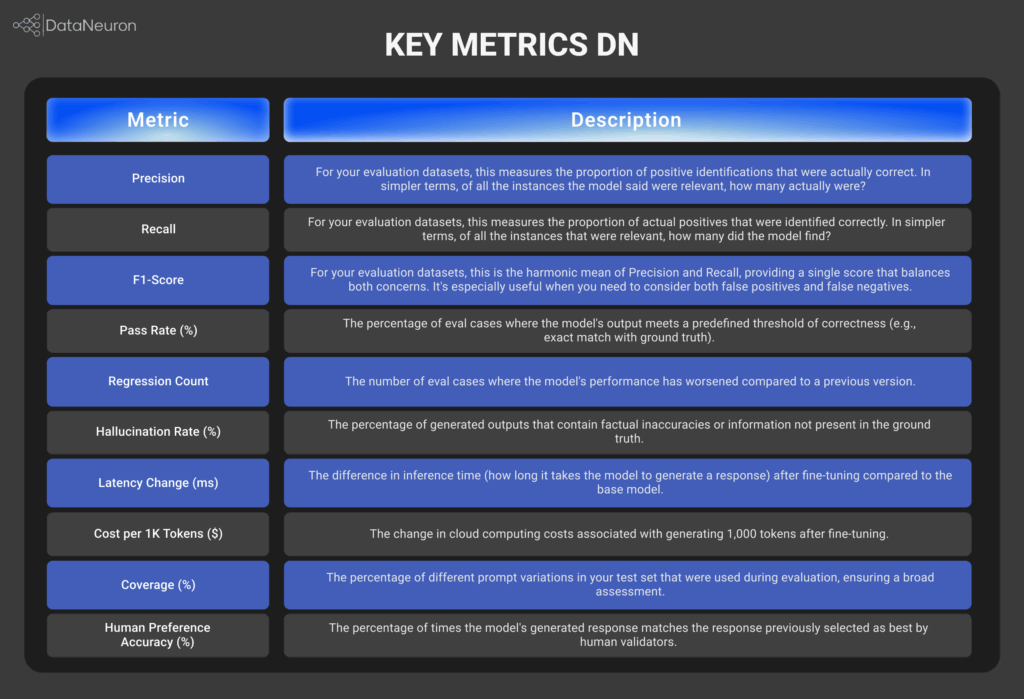

Built-In Benchmarking

Parallel versions allow direct comparison of model responses across the same prompts and queries. Benchmarking becomes part of the workflow, not a separate exercise.
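A side-by-side comparison of this kind can be sketched in a few lines. The example below is an assumption-laden toy: the two "models" are plain Python functions standing in for fine-tuned versions, and `compare_versions` and `disagreements` are hypothetical helpers, not an existing interface.

```python
def compare_versions(versions, prompts):
    """Run every prompt through every version and collect the responses."""
    return {
        prompt: {name: model(prompt) for name, model in versions.items()}
        for prompt in prompts
    }


def disagreements(table):
    """Return the prompts on which the versions produced different responses."""
    return [
        prompt
        for prompt, answers in table.items()
        if len(set(answers.values())) > 1
    ]


# Stand-ins for two fine-tuned versions of the same base model.
def model_v1(prompt):
    return prompt.upper()


def model_v2(prompt):
    return prompt.upper() if len(prompt) < 6 else prompt.title()


table = compare_versions(
    {"v1": model_v1, "v2": model_v2},
    ["reset", "reset password"],
)
changed = disagreements(table)
```

With these stand-ins, the two versions agree on the first prompt and diverge on the second, so `changed` contains only `"reset password"`. Reviewing just the diverging prompts is what makes benchmarking cheap enough to run on every iteration.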

Unified Multi-Version View

DataNeuron’s upcoming interface will allow users to query multiple fine-tuned versions simultaneously and view responses on a single screen. Differences in behavior become immediately visible, enabling faster and more confident deployment decisions.

Why This Matters for Enterprise AI

As enterprises converge DevOps and MLOps, unified versioning across data, models, and pipelines becomes critical. While existing tools have brought data versioning into mainstream adoption, DataNeuron goes further by enabling cloning, forking, and benchmarking designed specifically for LLM-scale workloads.

At scale, the organizations that succeed will be those that can switch between versions effortlessly, compare outcomes intelligently, and roll back confidently. Versioning is no longer an operational detail. It is the backbone of reliable, auditable, and high-velocity LLM deployment.