For years, Retrieval-Augmented Generation (RAG) systems have relied exclusively on text, from extracting, embedding, and generating knowledge purely from written data. That worked well for documents, PDFs, or transcripts. But enterprise data today is far more diverse and complex than plain text.

Think about how information really flows in an organization:

- Engineers exchange dashboards and visual reports.

- The design team shares annotated screenshots.

- Customer support records voice logs.

- Marketing stores campaign videos and infographics.

Each of these contains context that a text-only RAG cannot interpret or retrieve. The system would miss insights locked inside images, audio, or visual reports simply because it only “understands” text.

That’s where multimodal RAG comes into the picture. It allows large language models (LLMs) to retrieve and reason across multiple data formats (text, image, audio, and more) in a unified workflow. Instead of flattening everything into text, multimodal RAG brings together the semantics of different modalities to create more contextual and human-like responses.

How Multimodal RAG Works

At its core, multimodal RAG enhances traditional RAG pipelines by integrating data from multiple modalities into a single retrieval framework. There are two primary approaches that DataNeuron supports:

1. Transform Everything into Text (Text-Centric Multimodal RAG)

In this approach, all data types — whether image, video, or audio are converted into descriptive text before processing.

- Images → converted into captions or alt-text using vision models.

- Audio or video → transcribed into text using speech recognition.

Once everything is transformed into text, the RAG pipeline proceeds as usual:

The text data is chunked, embedded using a text embedding model (OpenAI, etc.), stored in a vector database, and used for retrieval and augmentation during generation.

Advantages:

- Easy to implement and integrates with existing RAG systems.

- Leverages mature text embedding models and infrastructure.

Limitations:

- Some modality-specific context may be lost during text conversion (e.g., image tone, sound quality).

- Requires extra preprocessing and storage overhead.

This method forms the foundation of DataNeuron’s current multimodal pipeline, ensuring a smooth path for teams who want to start experimenting with multimodal inputs without changing their RAG setup.

2. Native Multimodal RAG (Unified Embeddings for Mixed Formats)

The second approach skips the text conversion layer. Instead, it uses embedding models that natively support multiple modalities, meaning they can directly process and represent text, images, and audio together in a shared vector space.

Models like CLIP (Contrastive Language Image Pre-training) and AudioCLIP are examples of this. They learn relationships between modalities. For instance, aligning an image with its caption or an audio clip with its textual label, so that both the image and the text share semantic proximity in vector space.

Advantages:

- With higher accuracy, the original semantic and visual information is preserved.

- Enables advanced search and retrieval (e.g., querying an image database using text, or retrieving audio clips related to a written description).

Limitations:

- Computationally heavier and more complex to fine-tune.

- Fewer mature models are available today compared to text embeddings.

At DataNeuron, we are actively experimenting with both open-source (e.g., OpenCLIP) and enterprise-grade (paid) embedding models to power multimodal RAG. This dual strategy gives users flexibility to balance performance, cost, and deployment preferences.

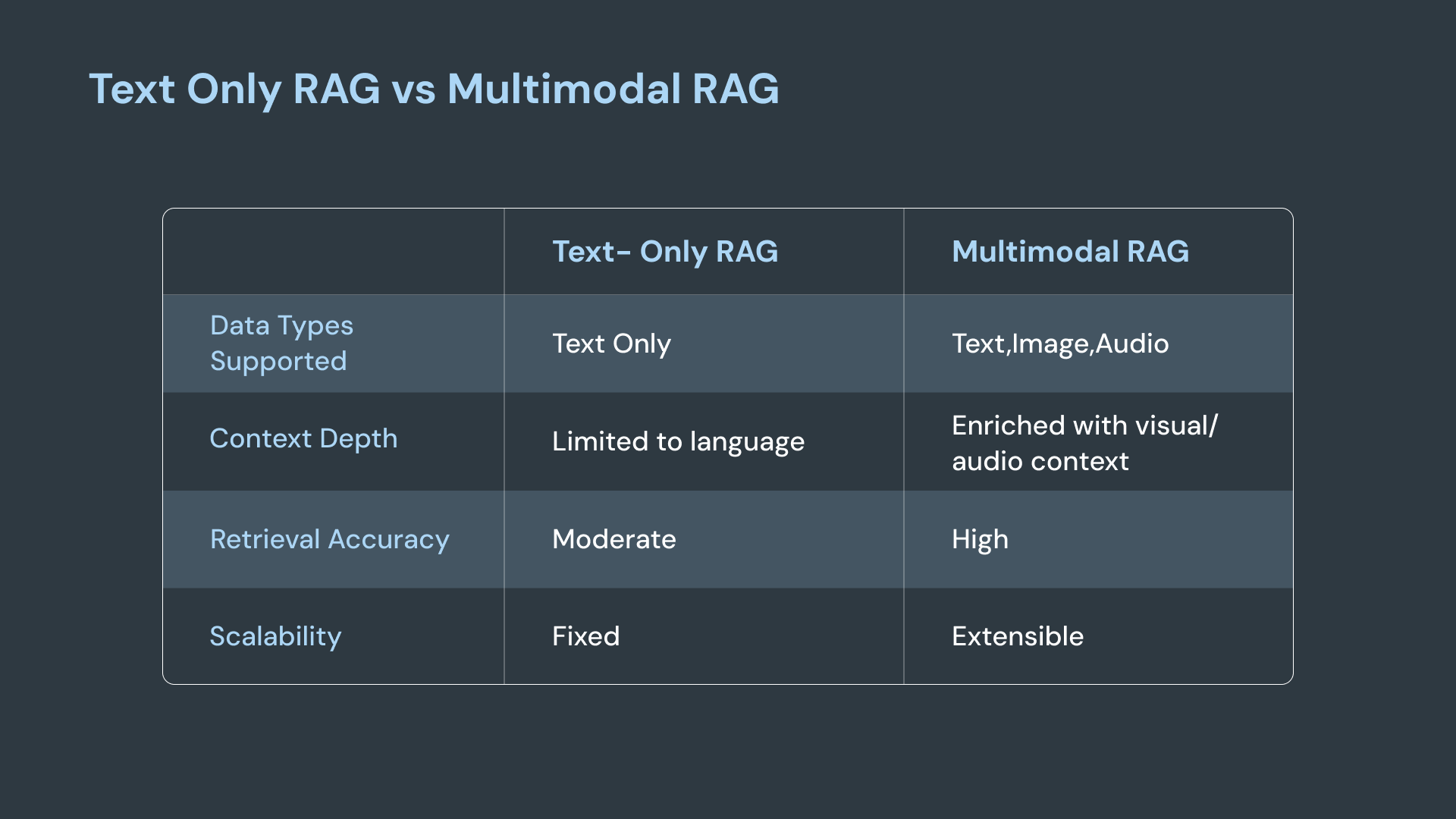

Benefits of Multimodal RAG over Text-Only AI

Transitioning from text-only RAG to multimodal RAG is a shift toward complete context understanding. Here’s how multimodal RAG enhances intelligence across business workflows:

1. Deeper Contextual Retrieval

In text-only RAG, context retrieval depends on written tokens. With multimodal RAG, the system can relate text to associated visuals or audio cues.

For example, instead of returning only a report, a query like “show me the marketing campaign for Q2” can also retrieve the campaign poster, promotional video snippets, or screenshots from the presentation deck, all semantically aligned in one search.

2. Unified Knowledge Base

Multimodal RAG consolidates multiple data silos (PDFs, images, voice logs, infographics) into a single retrieval layer, so teams no longer have to manage separate tools or manual preprocessing. This unified vector store ensures that information from all sources contributes equally to the model’s reasoning.

3. Enhanced Accuracy in Generation

By retrieving semantically linked data across formats, multimodal RAG provides a richer grounding context to LLMs. This leads to more accurate and contextually relevant responses, especially in cases where visual or auditory cues complement text (e.g., summarizing a product design image along with its specs).

4. Scalability Across Data Types

Enterprise data continues to diversify from 3D visuals to real-time sensor logs. A multimodal RAG pipeline is future-ready, capable of adapting to new formats without rebuilding the system from scratch.

5. Operational Efficiency

Rather than running separate AI systems for each data type (text, image, or audio), multimodal RAG centralizes embedding, indexing, and retrieval. This simplifies maintenance, reduces compute duplication, and accelerates development cycles.

Together, these changes make multimodal RAG a natural evolution for enterprise AI platforms like DataNeuron, where knowledge is never just text but a blend of visuals, speech, and data.

Leave a Reply