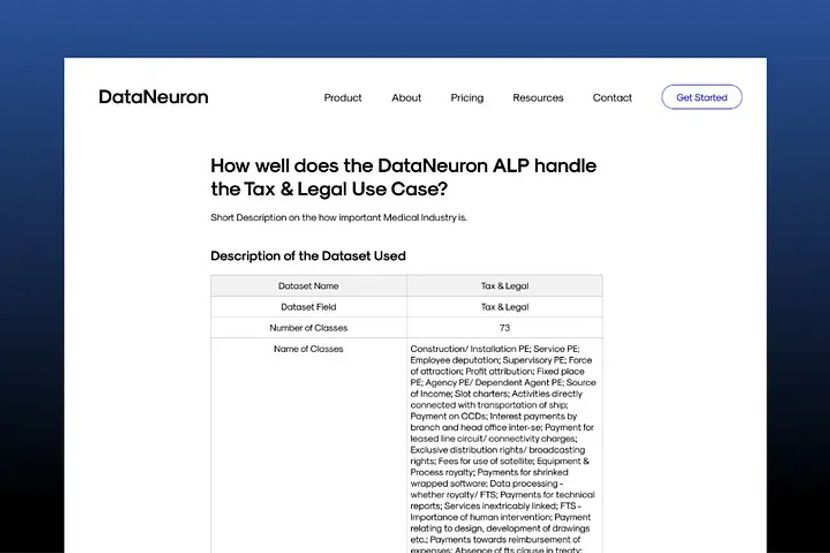

The table above describes the dataset used to conduct this case study.

Explaining the DataNeuron Pipeline

The DataNeuron Pipeline consists of seven stages: Ingest, Structure, Validate, Train, Predict, Deploy and Iterate.

Results of our Experiment

Reduction in SME Labelling Effort

In an in-house project, the SMEs have to go through every paragraph in the dataset to work out which paragraphs actually belong to the 73 classes mentioned above. This usually takes a tremendous amount of time and effort.

With the DataNeuron ALP, the algorithm performed strategic annotation on 15000 raw paragraphs, picked out the paragraphs that belonged to the 73 classes, and provided 4303 paragraphs to the user for validation. Even at as little as 45 seconds per paragraph, an in-house project would take an estimated 187.5 hours just to annotate all the paragraphs, whereas with DataNeuron it took only 35.85 hours.

Difference in paragraphs annotated between an in-house solution and DataNeuron.

Advantage of Suggestion-Based Annotation

Instead of making users go through the entire dataset to label paragraphs that belong to a certain class, DataNeuron uses a validation-based approach to make the model training process considerably easier. The platform uses context-based filtering and analysis of the masterlist to provide users with a list of labelled paragraphs that are most likely to belong to the same class. The users simply have to validate whether each system-labelled paragraph belongs to the class mentioned.

This validation-based approach also reduces the time it takes to annotate each paragraph. Based on our estimate, it takes approximately 30 seconds for a user to decide whether a paragraph belongs to a particular class, compared with 45 seconds to annotate a paragraph from scratch. At that rate, it would take an estimated 35.85 hours for the users to validate the 4303 paragraphs provided by the DataNeuron ALP. Compared to the 187.5 hours it would take an in-house team to complete the annotation process, DataNeuron offers an 81% reduction in time spent.

Difference in time spent annotating between an in-house solution and DataNeuron.
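The time figures above follow from simple arithmetic, which can be reproduced with a short sketch. The paragraph counts and per-paragraph timings come from this case study; the variable and function names are illustrative only and are not part of the DataNeuron platform.

```python
# Figures quoted in the case study.
TOTAL_PARAGRAPHS = 15_000        # raw paragraphs an in-house team would annotate
VALIDATED_PARAGRAPHS = 4_303     # paragraphs DataNeuron ALP passed on for validation
SECONDS_PER_ANNOTATION = 45      # estimated time to label a paragraph from scratch
SECONDS_PER_VALIDATION = 30      # estimated time to validate a suggested label


def effort_hours(paragraphs: int, seconds_each: int) -> float:
    """Total effort in hours for a given number of paragraphs."""
    return paragraphs * seconds_each / 3600


in_house_hours = effort_hours(TOTAL_PARAGRAPHS, SECONDS_PER_ANNOTATION)
dataneuron_hours = effort_hours(VALIDATED_PARAGRAPHS, SECONDS_PER_VALIDATION)

# Relative reductions in paragraphs reviewed and in time spent.
paragraph_reduction = 1 - VALIDATED_PARAGRAPHS / TOTAL_PARAGRAPHS
time_reduction = 1 - dataneuron_hours / in_house_hours

print(f"In-house effort:     {in_house_hours:.2f} hours")
print(f"DataNeuron effort:   {dataneuron_hours:.2f} hours")
print(f"Paragraph reduction: {paragraph_reduction:.1%}")
print(f"Time reduction:      {time_reduction:.1%}")
```

The DataNeuron effort works out to roughly 35.86 hours, which the text reports as 35.85, and the time reduction rounds to the 81% quoted above.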

The Accuracy Achieved

In this case study, the model trained by the DataNeuron ALP achieved an accuracy of 87%. Considering the high number of classes and the small number of training paragraphs, this suggests the approach works well in real-world scenarios. The accuracy can be improved further by validating more paragraphs or by adding seed paragraphs.

Calculating the Cost ROI

In an in-house project, all 15000 paragraphs need to be annotated, at a cost of approximately $3280. With the DataNeuron ALP, only 4303 paragraphs need to be annotated, since most of the paragraphs that did not belong to any of the 73 classes were discarded using context-based filtering. The cost of annotating these 4303 paragraphs using the DataNeuron ALP is $976.85.

Difference in cost between an in-house solution and DataNeuron.
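As a rough illustration, the cost comparison can be worked through in the same way. The two cost totals and paragraph counts are taken from this case study; the savings, percentage reduction and per-paragraph costs are derived here and are not figures stated in the original write-up.

```python
# Figures quoted in the case study.
IN_HOUSE_COST_USD = 3280.00      # annotating all 15000 paragraphs in-house
DATANEURON_COST_USD = 976.85     # annotating 4303 paragraphs with DataNeuron ALP
IN_HOUSE_PARAGRAPHS = 15_000
DATANEURON_PARAGRAPHS = 4_303

# Derived values (not stated in the case study itself).
savings_usd = IN_HOUSE_COST_USD - DATANEURON_COST_USD
cost_reduction = savings_usd / IN_HOUSE_COST_USD
in_house_cost_per_paragraph = IN_HOUSE_COST_USD / IN_HOUSE_PARAGRAPHS
dataneuron_cost_per_paragraph = DATANEURON_COST_USD / DATANEURON_PARAGRAPHS

print(f"Savings:        ${savings_usd:,.2f}")
print(f"Cost reduction: {cost_reduction:.1%}")
print(f"In-house cost per paragraph:   ${in_house_cost_per_paragraph:.3f}")
print(f"DataNeuron cost per paragraph: ${dataneuron_cost_per_paragraph:.3f}")
```

Under these figures the per-paragraph cost is similar in both cases, so most of the saving comes from the smaller number of paragraphs that need human attention.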

No Requirement for a Data Science/Machine Learning Expert

The DataNeuron ALP is designed in such a way that no prerequisite knowledge of data science or machine learning is required to utilize the platform to its maximum potential.

For some very specific use cases a Subject Matter Expert (SME) might be required, but for the majority of use cases an SME is not required in the DataNeuron Pipeline.
